About Me

I am a Lead Data Scientist at Legal & General and a Visiting Researcher at University College London (UCL), where my research focuses on Generative and Responsible AI. Previously, I was a Lecturer in Data Science and Signal Processing in the Department of Electronic and Electrical Engineering at UCL.

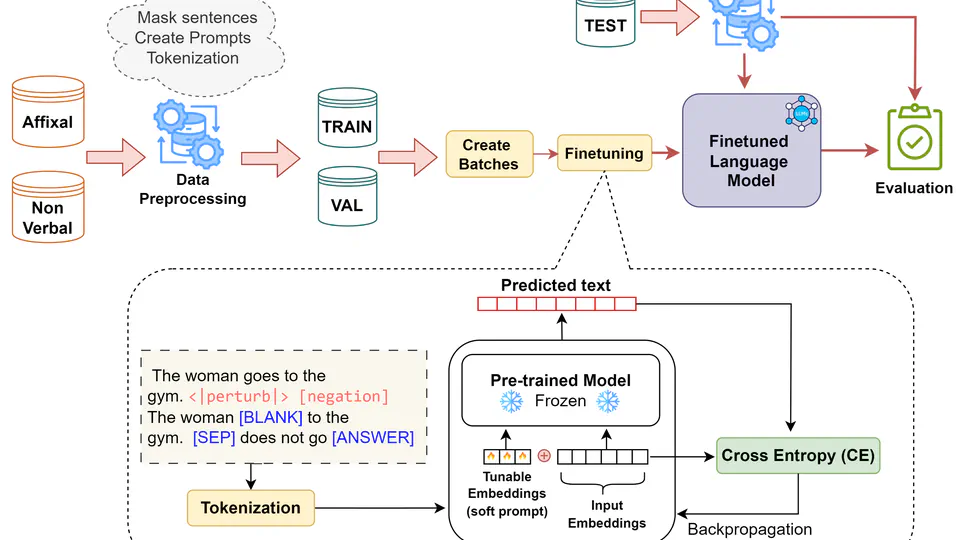

- Generative AI

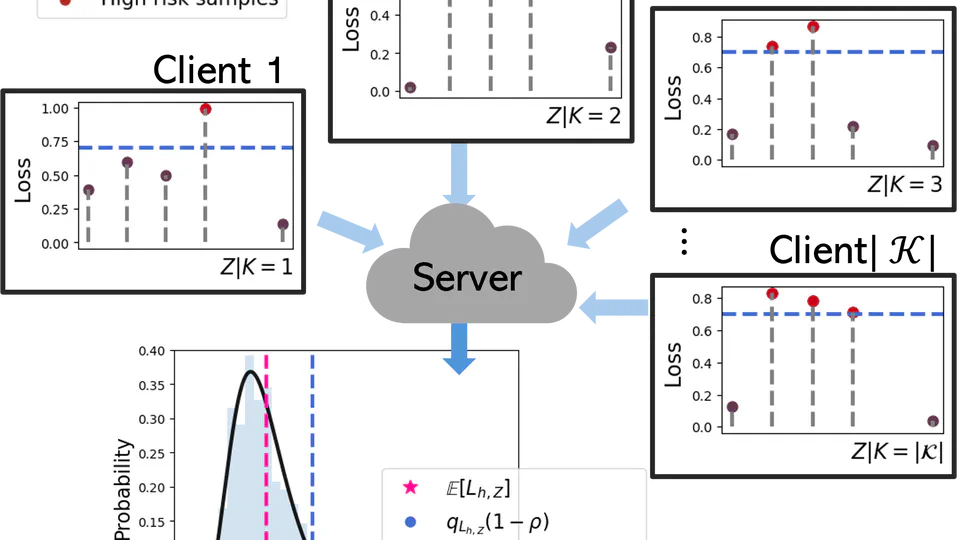

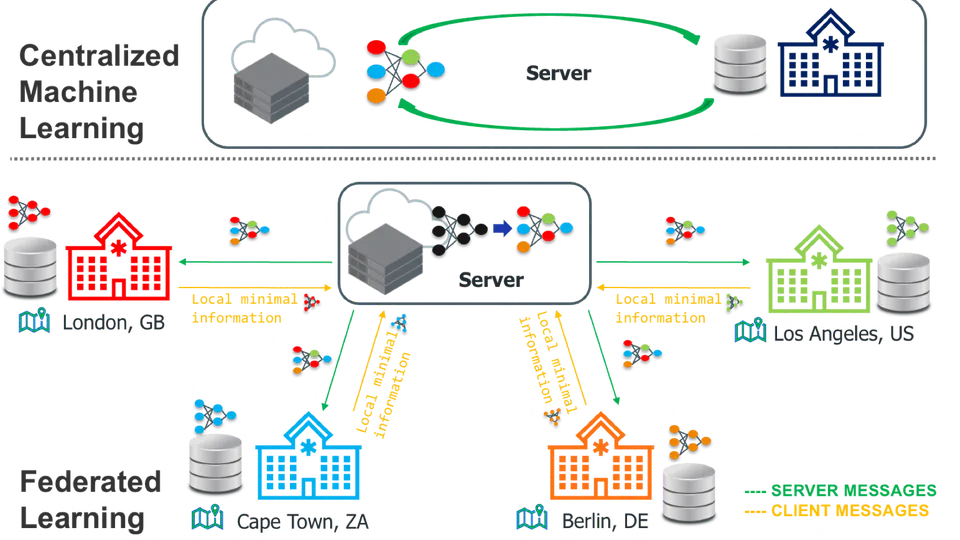

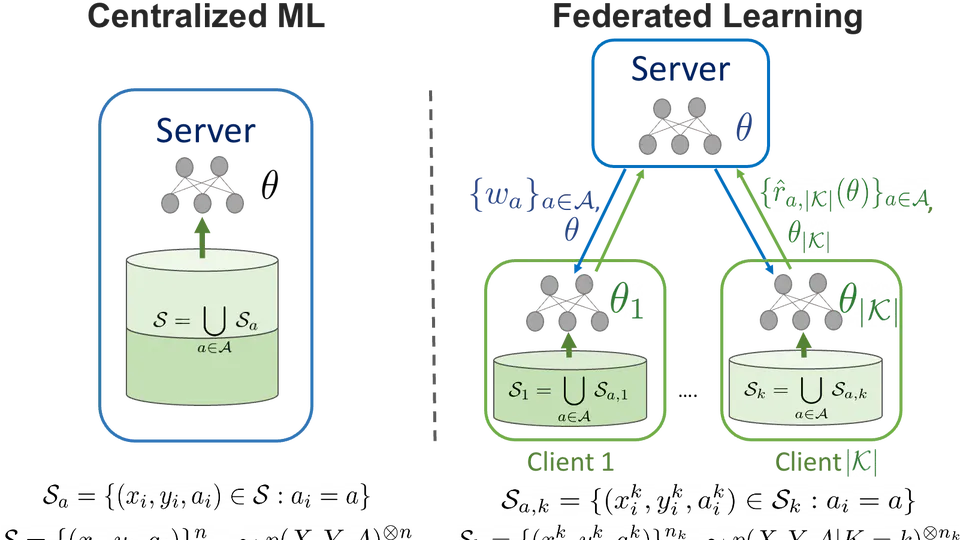

- Federated Learning

- Responsible AI (Fairness, Robustness, Safety, Privacy)

PhD in Artificial Intelligence

University College London

MSc in Internet Engineering

University College London

BSc in Digital Systems

University of Piraeus

At Legal & General, I design and develop generative and agentic AI systems for financial and enterprise applications, including question answering, content tagging and classification, text generation, and web search summarisation.

In parallel, my research at UCL and in independent projects aims to build efficient, adaptable methods that improve the robustness, predictive performance, and scalability of generative AI applications. I also work on approaches that support Responsible AI in distributed and heterogeneous environments, with a particular focus on federated learning and multi-agent systems.

If you’re working on related problems or are interested in collaboration, feel free to reach out 😃